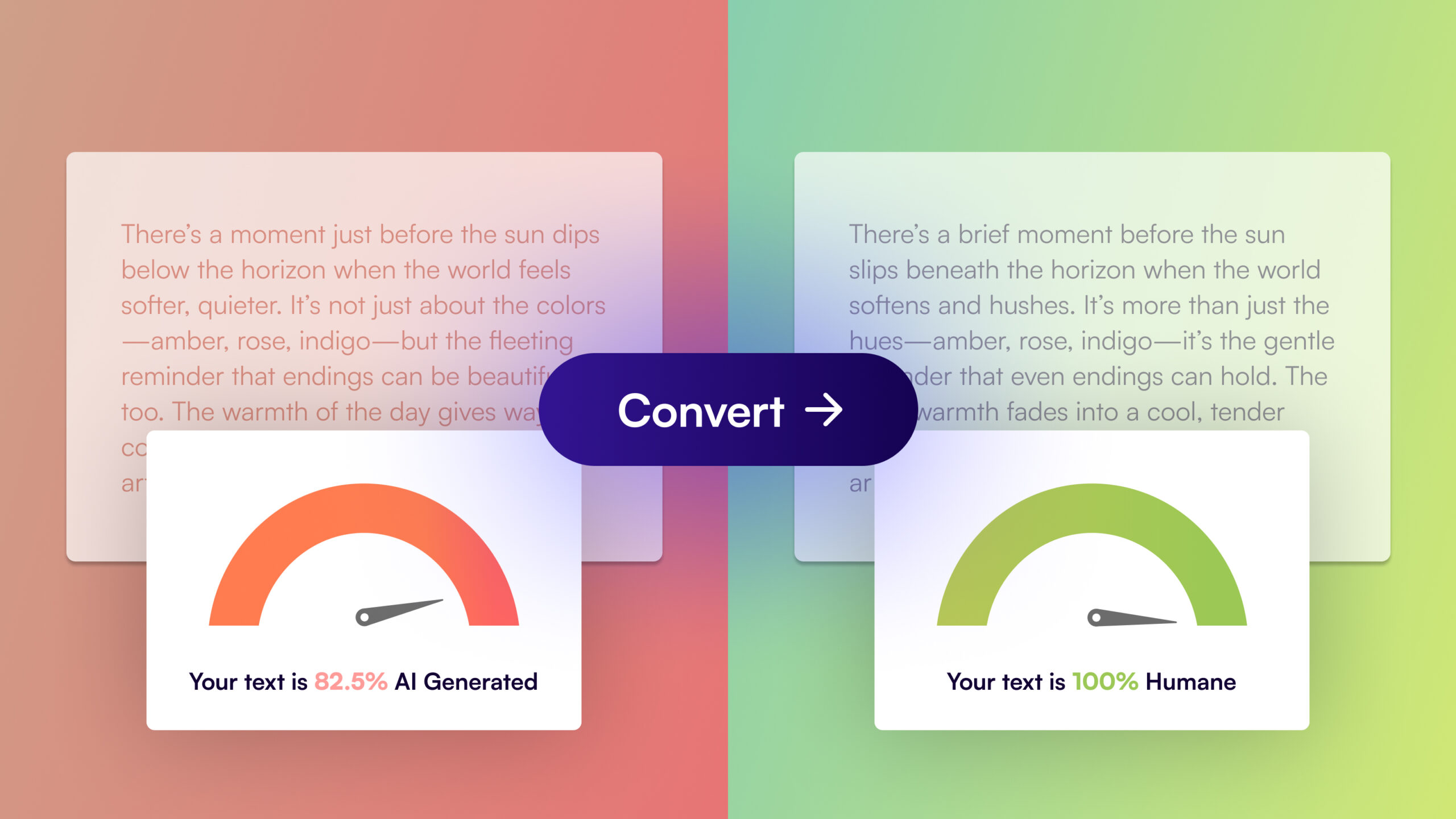

Artificial intelligence detection tools are becoming increasingly common in schools, universities, and professional settings. Their goal is to identify AI-generated content and protect originality, but relying on them too heavily poses risks for writers and students alike. Anyone whose writing may be screened by these tools should understand those risks.

Inaccurate Judgments and False Positives

AI detectors are not perfect. They rely on statistical patterns to estimate whether a text is AI-generated, so their output is an estimate rather than proof, and false positives are possible. Human-written content that is clear, well-structured, or formal can be flagged incorrectly as AI-written. For students, this can result in unfair accusations of plagiarism or cheating, damaging academic reputations without cause.
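To see why even a small error rate matters at scale, here is a minimal arithmetic sketch in Python. The function name `expected_false_flags` and both input numbers are hypothetical, chosen only for illustration; they are not measurements of any real detector.

```python
def expected_false_flags(num_human_essays: int, false_positive_rate: float) -> float:
    """Expected number of human-written essays wrongly flagged as AI-written."""
    if not 0.0 <= false_positive_rate <= 1.0:
        raise ValueError("false_positive_rate must be between 0 and 1")
    return num_human_essays * false_positive_rate


# Hypothetical inputs for illustration only: 2,000 human-written essays
# screened by a detector assumed to misflag 1% of human writing.
flags = expected_false_flags(num_human_essays=2000, false_positive_rate=0.01)
print(f"Expected wrongly flagged essays: {flags:.0f}")
```

Under these assumed numbers, about 20 students would face an accusation despite having written their essays themselves. The real figure depends on the actual tool and the kind of writing it screens.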

Stifling Creativity and Writing Development

When writers and students fear AI detection, they may avoid using helpful AI tools that could aid their creativity and learning. Tools like grammar checkers, writing assistants, or AI-based brainstorming aids can enhance writing quality. Overreliance on detection tools may discourage experimentation, leading to less innovative and less confident writing.

Privacy Concerns and Data Security

Many AI detection services collect and store submitted texts for analysis. This raises concerns about privacy and data security, especially when sensitive or personal writing is involved. Writers and students may unknowingly share their original work with third parties, risking misuse or unauthorized distribution.

Ethical Dilemmas and Pressure

Detection tools often create a high-pressure environment. Students may feel forced to hide any use of AI assistance, even when it is allowed or encouraged, leading to ethical confusion. Similarly, writers might feel pressured to “game” the system rather than focus on producing authentic, high-quality work.

Impact on Educational Fairness

While detection tools aim to promote fairness, they can unintentionally create new inequalities. Students with limited access to human editing help or other resources may be penalized more often than those whose professional support helps them disguise AI usage.
